Memory Efficient Stereoscopy from Light Fields

C. Kim, U. Müller, H. Zimmer, Y. Pritch, A. Sorkine-Hornung, M. GrossProceedings of International Conference on 3D Vision (3DV) (Tokyo, Japan, December 8-11, 2014), pp. 73-80

Abstract

We address the problem of stereoscopic content generation from light fields using multi-perspective imaging. Our proposed method takes as input a light field and a target disparity map, and synthesizes a stereoscopic image pair by selecting light rays that fulfill the given target disparity constraints. We formulate this as a variational convex optimization problem. Compared to previous work, our method makes use of multi-view input to composite the new view with occlusions and disocclusions properly handled, does not require any correspondence information such as scene depth, is free from undesirable artifacts such as grid bias or image distortion, and is more efficiently solvable. In particular, our method is about ten times more memory efficient than the previous art, and is capable of processing higher resolution input. This is essential to make the proposed method practically applicable to realistic scenarios where HD content is standard. We demonstrate the effectiveness of our method experimentally.Overview

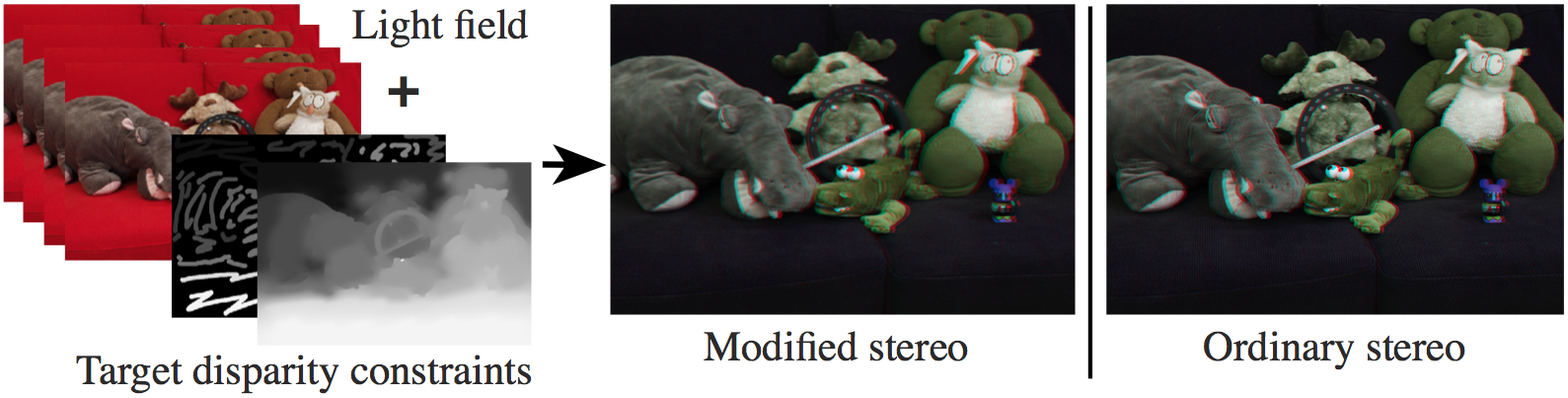

Our method takes a light field and target disparity constraints the user wants to achieve, and synthesizes the stereo pair which best fulfills the desired constraints. It allows the user to modify the depth perception in a more expressive and robust way. This example shows a use case based on scribbles, where the relative depths of the hippo and the bear are reversed; compare to the ordinary stereo pair formed of two views from the input light field.