On Multimodal Emotion Recognition for Human-Chatbot Interaction in the Wild

Nikola Kovacevic, C. Holz, M. Gross, R. Wampfler

Proceedings of the 26th International Conference on Multimodal Interaction (ICMI) (San José, Costa Rica, November 04-08, 2024), pp. 12-21

Abstract

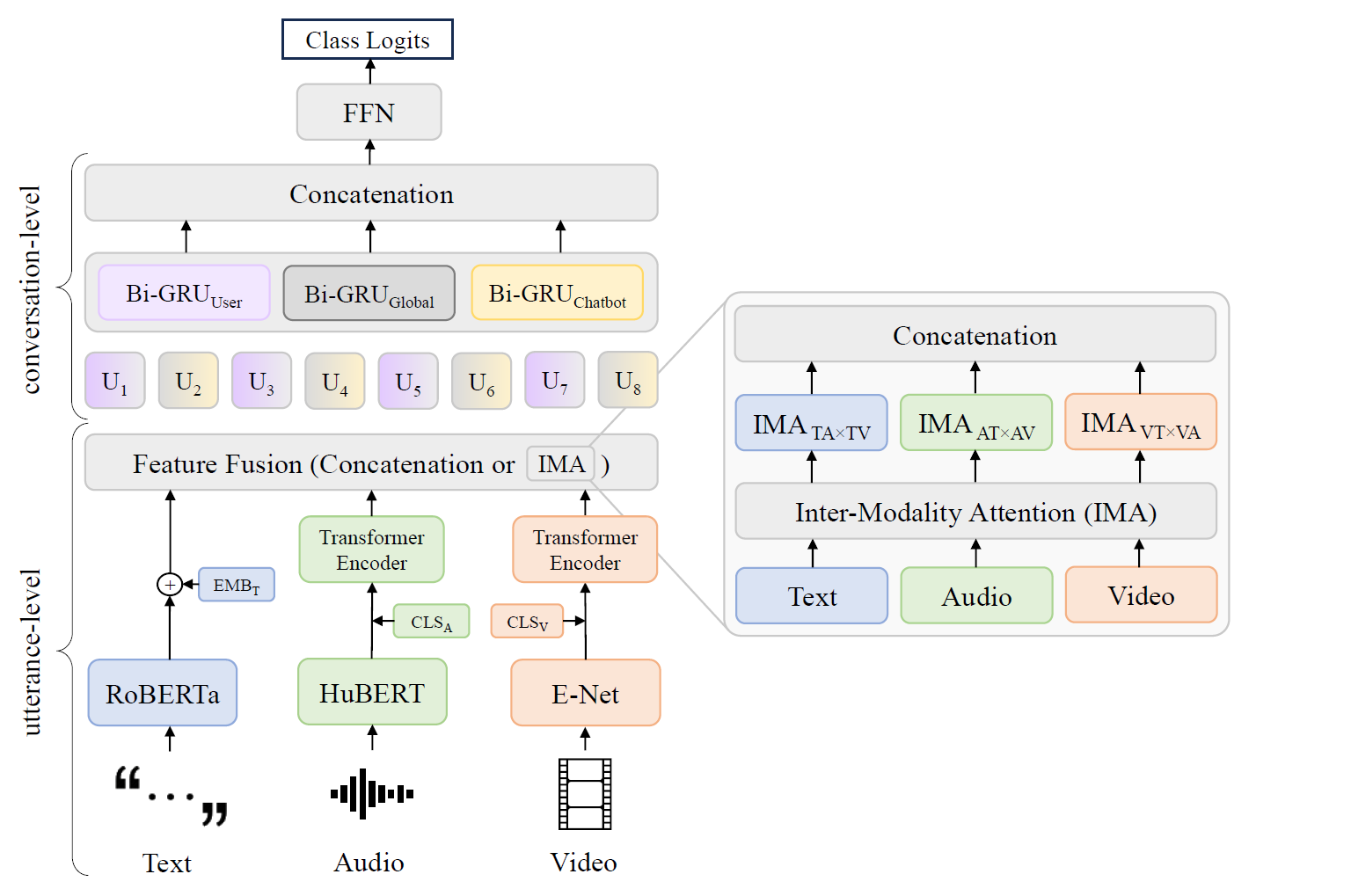

The field of natural language generation is swiftly evolving, giving rise to powerful conversational characters for use in different applications such as entertainment, education, and healthcare. A central aspect of these applications is providing personalized interactions, driven by the ability of the characters to recognize and adapt to user emotions. Current emotion recognition models primarily rely on datasets collected from actors or in controlled laboratory settings focusing on human-human interactions, which hinders their adaptability to real-world applications for conversational agents. In this work, we unveil the complexity of human-chatbot emotion recognition in the wild. We collected a multimodal dataset consisting of text, audio, and video recordings from 99 participants while they conversed with a GPT-3-based chatbot over three weeks. Using different transformer-based multimodal emotion recognition networks, we provide evidence for a strong domain gap between human-human interaction and human-chatbot interaction. We attribute this gap to the subjective nature of self-reported emotion labels, the reduced activation and expressivity of the face, and the inherent subtlety of emotions in such settings, all of which emphasize the challenges of recognizing user emotions in real-world contexts. We show that personalizing our model to the user increases model performance by up to 38% (user emotions) and up to 41% (perceived chatbot emotions), highlighting the potential of personalization for overcoming the observed domain gap.