FreeCam: A Hybrid Camera System for Interactive Free-Viewpoint Video

C. Kuster, T. Popa, C. Zach, C. Gotsman, M. Gross

Proceedings of Vision, Modeling, and Visualization (VMV) (Berlin, Germany, October 4-6, 2011), pp. 17-24

Abstract

We describe FreeCam - a system capable of generating live free-viewpoint video by simulating the output of a virtual camera moving through a dynamic scene. The FreeCam sensing hardware consists of a small number of static color video cameras and state-of-the-art Kinect depth sensors, and the FreeCam software uses a number of advanced GPU processing and rendering techniques to seamlessly merge the input streams, providing a pleasant user experience. A system such as FreeCam is critical for applications such as telepresence, 3D video-conferencing and interactive 3D TV. FreeCam may also be used to produce multi-view video, which is critical to drive new-generation autostereoscopic lenticular 3D displays.

Overview

Free-viewpoint video is an area of active research in computer graphics. The goal is to allow the viewer of a video dataset, whether recorded or live, to move through the scene by freely and interactively changing the viewpoint, even though the footage is recorded by just a small number of static cameras. Potential applications are easy to imagine in the sports, gaming, entertainment, and defense industries. Most prominent are telepresence, interactive TV and immersive video-conferencing, which has emerged as a key Internet application with the now widespread use of commercial voice-and-video-over-IP systems, such as Skype.

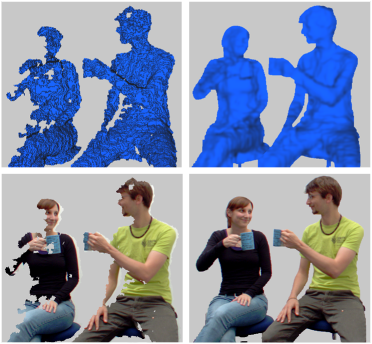

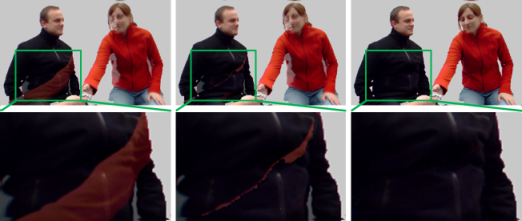

FreeCam is a hybrid system for free-viewpoint video based on both color and depth video cameras. The FreeCam hardware consists of three color video cameras and two depth cameras. Combining the data streams seamlessly to synthesize a novel view of a general scene is a significant challenge: multiple issues, such as sensor noise, missing data, coverage, seamless data merging and device calibration, require non-trivial processing to generate high-quality, artifact-free imagery. It is all the more challenging to perform all this at real-time rates.
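To make the novel-view problem concrete, the following is a minimal sketch of generic depth-image warping. It is not the FreeCam pipeline, which this page does not detail. It assumes an ideal pinhole camera, the same intrinsics `(fx, fy, cx, cy)` for the source and virtual cameras, and a known rigid transform between them. All function names are hypothetical. Each depth pixel is back-projected to 3D, moved into the virtual camera's frame, and projected again, with a z-test keeping the nearest surface.

```python
def unproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with metric depth into a camera-space 3D point."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def transform(point, rotation, translation):
    """Apply a rigid transform: 3x3 rotation (nested lists), then translation."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point, fx, fy, cx, cy):
    """Project a camera-space point to pixel coordinates; None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def warp_depth(depth, intrinsics, rotation, translation):
    """Forward-warp a depth map into a virtual view (assumed same intrinsics).

    Pixels that receive no sample stay None; these holes are the
    "missing data" a real system would have to fill or blend over.
    """
    fx, fy, cx, cy = intrinsics
    height, width = len(depth), len(depth[0])
    out = [[None] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            d = depth[v][u]
            if d is None or d <= 0:
                continue  # invalid sensor reading
            p = transform(unproject(u, v, d, fx, fy, cx, cy), rotation, translation)
            uv = project(p, fx, fy, cx, cy)
            if uv is None:
                continue
            tu, tv = int(round(uv[0])), int(round(uv[1]))
            if 0 <= tu < width and 0 <= tv < height:
                # z-test: keep the surface closest to the virtual camera
                if out[tv][tu] is None or p[2] < out[tv][tu]:
                    out[tv][tu] = p[2]
    return out


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
row = [[2.0, 2.0, 2.0, 2.0]]
intr = (100.0, 100.0, 2.0, 0.0)
same_view = warp_depth(row, intr, IDENTITY, (0.0, 0.0, 0.0))
shifted_view = warp_depth(row, intr, IDENTITY, (0.02, 0.0, 0.0))
```

In this toy case, `same_view` reproduces the input, while `shifted_view` moves every sample one pixel to the right and leaves the leftmost pixel empty. Even a small viewpoint change exposes regions that a single sensor never observed, which is one reason systems of this kind combine several sensors.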

Results

FreeCam provides live free-viewpoint video at interactive rates using a small number of off-the-shelf sensor components and quite standard computing power. It is one of the first free-viewpoint systems to incorporate the new Kinect depth sensor, and we expect this trend to strengthen as the sensor evolves.