Gaze Correction for Home Video Conferencing

C. Kuster, T. Popa, J.C. Bazin, C. Gotsman, M. Gross

Proceedings of ACM SIGGRAPH Asia (Singapore, November 28 - December 1, 2012), ACM Transactions on Graphics, vol. 31, no. 6, pp. 174:1-174:6

Abstract

Effective communication using current video conferencing systems is severely hindered by the lack of eye contact caused by the disparity between the locations of the subject and the camera. While this problem has been partially solved for high-end expensive video conferencing systems, it has not been convincingly solved for consumer-level setups. We present a gaze correction approach based on a single Kinect sensor that preserves both the integrity and expressiveness of the face as well as the fidelity of the scene as a whole, producing nearly artifact-free imagery. Our method is suitable for mainstream home video conferencing: it uses inexpensive consumer hardware, achieves real-time performance and requires just a simple and short setup. Our approach is based on the observation that for our application it is sufficient to synthesize only the corrected face. Thus we render a gaze-corrected 3D model of the scene and, with the aid of a face tracker, transfer the gaze-corrected facial portion in a seamless manner onto the original image.

Fast Forward

A short clip introducing the project (with audio).

Overview

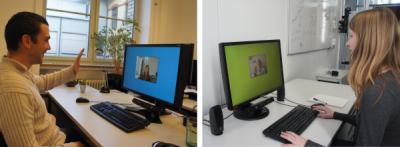

Mutual gaze awareness is a critical aspect of human communication. To imitate real-world communication patterns realistically in virtual communication, eye contact must therefore be preserved. Unfortunately, conventional hardware setups for consumer video conferencing inherently prevent this. During a session we tend to look at the face of the person talking, rendered in a window within the display, and not at the camera, which is typically located at the top or bottom of the screen. As a result, eye contact is not possible.
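To give a rough sense of the size of the disparity described above, the following minimal sketch computes the angle between looking at the camera and looking at a point on the screen. The function name and the example distances (a 15 cm offset between the camera and the displayed face, viewed from 60 cm) are illustrative assumptions, not measurements from the paper.

```python
import math

def gaze_offset_degrees(camera_offset_m, viewing_distance_m):
    """Angle (in degrees) between the line of sight to the camera and the
    line of sight to the on-screen face, seen from the viewer's position.

    camera_offset_m: assumed distance on the screen plane between the camera
                     and the point the viewer is looking at.
    viewing_distance_m: assumed distance from the viewer to the screen.
    """
    return math.degrees(math.atan2(camera_offset_m, viewing_distance_m))

# Hypothetical laptop setup: camera 15 cm above the displayed face, viewer 60 cm away.
angle = gaze_offset_degrees(0.15, 0.60)
print(f"gaze offset: {angle:.1f} degrees")
```

Under these assumed numbers the offset is roughly 14 degrees, and it grows as the viewer moves closer to the screen or as the window moves further from the camera.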

In this paper we propose a gaze correction system targeted at a peer-to-peer video conferencing model that runs in real time on average consumer hardware and requires only one hybrid depth/color sensor such as the Kinect. Our goal is to perform gaze correction without damaging the integrity of the image (i.e., loss of information or visual artifacts) while completely preserving the facial expression of the person.
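The idea of replacing only the face while leaving the rest of the frame untouched can be illustrated with a small compositing sketch. This is not the method of the paper: it uses a plain feathered alpha blend over an elliptical region as a simplified stand-in, and the ellipse center and radii (which a face tracker would supply in practice) are hypothetical inputs. Images are represented as nested lists of grayscale values to keep the example dependency-free.

```python
import math

def elliptical_mask(height, width, cy, cx, ry, rx, feather=0.25):
    """Build a height x width mask that is 1 inside an ellipse and fades
    linearly to 0 over the outer `feather` fraction of its radius.
    (cy, cx) and (ry, rx) are assumed to come from a face tracker."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Normalized elliptical radius: 1.0 on the ellipse boundary.
            r = math.hypot((y - cy) / ry, (x - cx) / rx)
            alpha = (1.0 - r) / feather
            row.append(min(1.0, max(0.0, alpha)))
        mask.append(row)
    return mask

def composite(original, corrected, mask):
    """Blend the corrected face onto the original frame, pixel by pixel.
    Where mask is 0 the original pixel is returned unchanged."""
    return [
        [m * c + (1.0 - m) * o for o, c, m in zip(o_row, c_row, m_row)]
        for o_row, c_row, m_row in zip(original, corrected, mask)
    ]

# Toy 5x5 frames: the original is all 0.0, the "gaze-corrected" render is all 1.0.
original = [[0.0] * 5 for _ in range(5)]
corrected = [[1.0] * 5 for _ in range(5)]
mask = elliptical_mask(5, 5, cy=2, cx=2, ry=2, rx=2, feather=0.5)
result = composite(original, corrected, mask)
```

Because pixels outside the mask are copied from the original frame, the background and any regions the depth sensor could not reconstruct are left as captured; only the face region takes values from the corrected render.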

Results

The system runs at about 20 Hz on a consumer computer and the results are convincing for a variety of face types, hairstyles, ethnicities, etc. Below is a representative selection from our results. Top rows: original images from the real camera. Bottom rows: gaze-corrected images obtained with our system.

Try it yourself!

Our Skype/Hangouts plugin is now available for download:

http://www.catch-eye.com