Spatio-Temporal Geometry Fusion for Multiple Hybrid Cameras using Moving Least Squares Surfaces

C. Kuster, J.C. Bazin, T. Deng, C. Oztireli, T. Martin, T. Popa, M. Gross

Proceedings of Eurographics (Strasbourg, France, April 7-11, 2014), Computer Graphics Forum, vol. 33, no. 2, pp. 1-10

Abstract

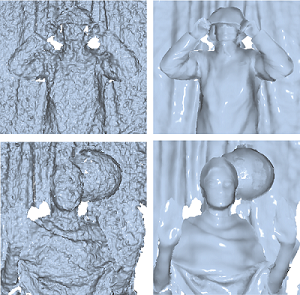

Multiview reconstruction aims at computing the geometry of a scene observed by a set of cameras. Accurate 3D reconstruction of dynamic scenes is a key component in a large variety of applications, ranging from special effects to telepresence and medical imaging. In this paper, we propose a method based on Moving Least Squares surfaces that robustly and efficiently reconstructs dynamic scenes captured by a set of hybrid color+depth cameras. Our reconstruction provides spatio-temporal consistency and seamlessly fuses color and geometric information. We illustrate our formulation on a variety of real sequences and demonstrate that it compares favorably to state-of-the-art methods.

Overview

Accurate reconstruction of dynamic scenes is a key component in a large variety of applications, ranging from special effects to telepresence and medical imaging. Recently, the emergence of low-cost hybrid color+depth cameras, such as the Kinect, has paved the way for real-time geometry acquisition. On the downside, the geometric data from such cameras is often of low quality and suffers from severe spatial and temporal artifacts. Moreover, these devices capture only 2.5D geometry (depth maps). To acquire the full 3D geometry of a scene over time, multiple devices must be used and their data fused into a single coherent representation.
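To make the step from 2.5D depth maps to a shared 3D point set concrete, the sketch below back-projects each camera's depth map into a common world frame and concatenates the results. It is a minimal illustration under stated assumptions, not the authors' acquisition pipeline: it assumes an ideal pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`, a calibrated camera-to-world transform `p_world = R p_cam + t`, and depth value 0 marking pixels without a measurement. The function names `backproject` and `fuse_depth_maps` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject(depth: Sequence[Sequence[float]], fx: float, fy: float,
                cx: float, cy: float, R: Sequence[Sequence[float]],
                t: Sequence[float]) -> List[Point]:
    """Lift one depth map (rows of metric depth, 0 = no measurement) to world-space points.

    Assumed (hypothetical) model: pinhole intrinsics (fx, fy, cx, cy) and a
    camera-to-world rigid transform p_world = R @ p_cam + t.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z <= 0.0:
                continue  # skip pixels where the sensor returned no depth
            # Invert the pinhole projection u = fx * x / z + cx (same for v).
            p_cam = ((u - cx) * z / fx, (v - cy) * z / fy, z)
            # Rotate and translate into the shared world frame.
            p_world = tuple(
                sum(R[i][j] * p_cam[j] for j in range(3)) + t[i] for i in range(3)
            )
            points.append(p_world)
    return points


def fuse_depth_maps(cameras: List[Dict]) -> List[Point]:
    """Concatenate the back-projected points of all cameras into one world-space set."""
    cloud: List[Point] = []
    for cam in cameras:
        cloud.extend(backproject(**cam))
    return cloud


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
cam_a = dict(depth=[[1.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0,
             R=identity, t=[0.0, 0.0, 0.0])
cam_b = dict(depth=[[1.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0,
             R=identity, t=[1.0, 0.0, 0.0])
print(fuse_depth_maps([cam_a, cam_b]))
# -> [(0.0, 0.0, 1.0), (0.0, 2.0, 2.0), (2.0, 2.0, 2.0), (1.0, 0.0, 1.0)]
```

Simply concatenating the samples leaves overlapping, noisy, and temporally flickering points from different devices; resolving those into one consistent surface is the job of the fusion method described next.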

We propose a fusion method for multiple color+depth cameras based on point-based surfaces and a Moving Least Squares (MLS) reconstruction technique. We adapt and extend current MLS reconstruction techniques to obtain an efficient, accurate, and robust reconstruction that can naturally handle surface discontinuities and consistently incorporate color and temporal information.
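For readers unfamiliar with MLS surfaces, the sketch below shows one basic member of the family: an implicit MLS (IMLS) function built from oriented points, f(x) = Σᵢ wᵢ(x) nᵢ·(x − pᵢ) / Σᵢ wᵢ(x), whose zero set defines the surface, plus a simple projection that steps a query point along the locally averaged normal until f(x) ≈ 0. This is a generic textbook-style variant chosen for illustration, not the paper's extended formulation; it omits the handling of discontinuities, color, and time. The Gaussian weight, the bandwidth `h`, the assumption that every sample carries a unit normal, and the function names are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]


def gaussian_weight(x: Sequence[float], p: Sequence[float], h: float) -> float:
    """Smooth, rapidly decaying weight w(x, p) = exp(-|x - p|^2 / h^2)."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, p))
    return math.exp(-d2 / (h * h))


def imls_eval(x: Sequence[float], points, normals, h: float) -> Tuple[float, Vec]:
    """Return the IMLS value f(x) and the weighted-average unit normal at x.

    f(x) = sum_i w_i(x) * n_i . (x - p_i) / sum_i w_i(x); the surface is f(x) = 0.
    """
    w_sum, f_sum = 0.0, 0.0
    n_sum = [0.0, 0.0, 0.0]
    for p, n in zip(points, normals):
        w = gaussian_weight(x, p, h)
        w_sum += w
        # Signed distance of x to the tangent plane of sample i, weighted.
        f_sum += w * sum(nk * (xk - pk) for nk, xk, pk in zip(n, x, p))
        for k in range(3):
            n_sum[k] += w * n[k]
    n_len = math.sqrt(sum(c * c for c in n_sum))
    if w_sum == 0.0 or n_len == 0.0:
        raise ValueError("query point has no usable support; increase h")
    return f_sum / w_sum, [c / n_len for c in n_sum]


def mls_project(x: Sequence[float], points, normals, h: float,
                max_iters: int = 20, tol: float = 1e-10) -> Vec:
    """Move x onto the zero set of f by repeated steps along the local normal."""
    x = list(x)
    for _ in range(max_iters):
        f, n = imls_eval(x, points, normals, h)
        if abs(f) < tol:
            break
        x = [xk - f * nk for xk, nk in zip(x, n)]
    return x


# Noise-free samples of the plane z = 0, all with normal +z.
grid = [(0.1 * i, 0.1 * j, 0.0) for i in range(-5, 6) for j in range(-5, 6)]
up = [(0.0, 0.0, 1.0)] * len(grid)
print(mls_project((0.1, 0.2, 0.5), grid, up, h=0.2))  # approximately [0.1, 0.2, 0.0]
```

In a multi-camera setting, `points` and `normals` would be the union of samples from all devices, so the weighted averaging in `imls_eval` is what blends overlapping, noisy measurements into one surface. A spatio-temporal variant could, for instance, also let the weight decay with the time difference between samples; this sketch uses spatial weights only.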