Automatic Jumping Photos on Smartphones

C. Garcia, J.C. Bazin, M. Lancelle, M. Gross

Proceedings of International Conference on Image Processing (ICIP) (Paris, France, October 27-30, 2014), pp.

Abstract

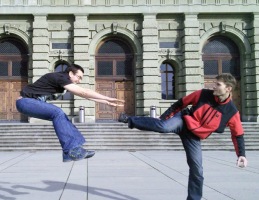

Jumping photos are very popular, particularly in the context of holidays, social events and entertainment. However, triggering the camera at the right moment to take a visually appealing jumping photo is quite difficult in practice, especially for casual photographers or for self-portraits. We propose a fully automatic method that solves this practical problem. By analyzing the ongoing jump motion online at a high rate, our method predicts the time at which the jumping person will reach the highest point and takes trigger delays into account to compute when the camera has to be triggered. Since smartphones are increasingly popular, we focus on these devices, which raises challenges such as limited computational power and limited data transfer rates. We developed an Android app for smartphones and used it to conduct experiments confirming the validity of our approach.

Overview

We track the jumping person's face and recognize the typical motion pattern of a person gathering momentum. This makes it possible to detect when the person is in the ballistic phase, i.e. not touching the ground. From several frames, we estimate the ballistic trajectory and predict the highest point. As long as there is enough time, more measurements are taken to refine the estimate. Finally, the camera is triggered in time, taking the trigger delay into account.
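The prediction step above can be illustrated with a minimal Python sketch. It assumes that each frame yields a timestamp in seconds and a face height measured upward in pixels (for instance the negated image row), and it uses a quadratic least-squares fit as a stand-in for the trajectory estimation; the exact estimator used in the paper is not described here. The names `fit_parabola`, `predict_apex_time` and `plan_step`, as well as the "refine while at least one more frame fits before the trigger time" rule, are hypothetical.

```python
"""Hypothetical sketch: predict the apex of a jump and when to trigger."""
from typing import List, Sequence, Tuple


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_parabola(ts: Sequence[float],
                 ys: Sequence[float]) -> Tuple[float, float, float, float]:
    """Least-squares fit of y = a*u^2 + b*u + c with u = t - t0, t0 = mean(ts).

    Centering the timestamps keeps the normal equations better conditioned.
    Returns (a, b, c, t0).
    """
    if len(ts) != len(ys) or len(set(ts)) < 3:
        raise ValueError("need at least 3 distinct timestamps with matching heights")
    n = len(ts)
    t0 = sum(ts) / n
    u = [t - t0 for t in ts]
    s = [sum(x ** k for x in u) for k in range(5)]                  # sums of u^k
    r = [sum(x ** k * y for x, y in zip(u, ys)) for k in range(3)]  # sums of u^k * y
    A = [[s[4], s[3], s[2]],
         [s[3], s[2], s[1]],
         [s[2], s[1], s[0]]]
    rhs = [r[2], r[1], r[0]]
    d = _det3(A)
    if d == 0.0:
        raise ValueError("singular normal equations")
    coeffs = []
    for col in range(3):  # Cramer's rule: replace one column by the right-hand side
        m = [row[:] for row in A]
        for i in range(3):
            m[i][col] = rhs[i]
        coeffs.append(_det3(m) / d)
    a, b, c = coeffs
    return a, b, c, t0


def predict_apex_time(ts: Sequence[float], ys: Sequence[float]) -> float:
    """Time at which the fitted trajectory reaches its highest point."""
    a, b, _, t0 = fit_parabola(ts, ys)
    if a >= 0.0:
        raise ValueError("no downward curvature: samples do not look ballistic yet")
    return t0 - b / (2.0 * a)  # vertex of a*u^2 + b*u + c, shifted back by t0


def plan_step(now_s: float, apex_s: float, trigger_delay_s: float,
              frame_interval_s: float) -> Tuple[str, float]:
    """Keep refining while another frame fits before the trigger time.

    Returns ("refine", trigger_at) or ("schedule", trigger_at), where
    trigger_at = apex_s - trigger_delay_s compensates the camera's delay.
    """
    trigger_at = apex_s - trigger_delay_s
    if now_s + frame_interval_s < trigger_at:
        return "refine", trigger_at
    return "schedule", trigger_at


if __name__ == "__main__":
    # Synthetic arc: height = 200 + 400 t - 800 t^2 pixels, apex at t = 0.25 s.
    ts = [0.0, 0.05, 0.10, 0.15]
    ys = [200 + 400 * t - 800 * t * t for t in ts]
    apex = predict_apex_time(ts, ys)
    print(round(apex, 3))  # 0.25
    print(plan_step(ts[-1], apex, trigger_delay_s=0.1, frame_interval_s=0.033))
```

Each new frame would append a sample and re-run the fit, so the apex estimate is refined until `plan_step` reports that the shutter must be scheduled. A real implementation would also have to reject outliers from the tracker and measure the device-specific trigger delay, which this sketch takes as a given constant.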

Results

Our approach is implemented as an Android app and deployed on multiple smartphones. The user launches our app, frames the shot via the displayed preview and then simply needs to tap a “start” button. The processing is then performed automatically and in real time on low-resolution images, and a high-resolution picture of the jump is captured. In case the face detection does not work, e.g. for a person not directly facing the camera, the user can tap on the smartphone screen to manually select the face or another textured part of the jumping person, which is then tracked automatically.
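The text does not specify which tracker follows a manually selected region. One common choice is template matching by sum of squared differences (SSD) inside a small search window around the previous position; the sketch below assumes grayscale low-resolution frames stored as nested lists and uses the hypothetical names `extract_patch`, `ssd` and `track_patch`.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]  # grayscale frame indexed as img[row][col]


def extract_patch(img: Image, top: int, left: int, h: int, w: int) -> Image:
    """Copy the h x w template around the point the user tapped."""
    return [row[left:left + w] for row in img[top:top + h]]


def ssd(img: Image, top: int, left: int, patch: Image) -> float:
    """Sum of squared differences between the patch and the window at (top, left)."""
    total = 0.0
    for i, prow in enumerate(patch):
        irow = img[top + i]
        for j, p in enumerate(prow):
            d = irow[left + j] - p
            total += d * d
    return total


def track_patch(img: Image, patch: Image, prev_top: int, prev_left: int,
                radius: int) -> Tuple[int, int]:
    """Return the (top, left) of the best SSD match within +/- radius pixels,
    clipped so the template always stays inside the frame."""
    h, w = len(patch), len(patch[0])
    rows, cols = len(img), len(img[0])
    best: Optional[Tuple[float, int, int]] = None
    for top in range(max(0, prev_top - radius), min(rows - h, prev_top + radius) + 1):
        for left in range(max(0, prev_left - radius), min(cols - w, prev_left + radius) + 1):
            score = ssd(img, top, left, patch)
            if best is None or score < best[0]:
                best = (score, top, left)
    if best is None:
        raise ValueError("search window does not fit inside the frame")
    return best[1], best[2]
```

On each new frame, `track_patch` would be called with the last known position, and the row of the match gives the vertical position used for the ballistic estimate. SSD is cheap but sensitive to lighting changes and rotation; normalized cross-correlation is a common, more robust alternative.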

More than 70% of the tested sequences have a temporal error of less than 40 ms. This shows that, in practice and despite potential noise sources, our method can still estimate the triggering time accurately.