Multimodal Dialog Act Classification for Conversations With Digital Characters

Philine Witzig, R. Constantin, N. Kovacevic, R. Wampfler

Proceedings of the 6th International Conference on Conversational User Interfaces (CUI) (Luxembourg, Luxembourg, July 08-10, 2024), pp. 1-14

Abstract

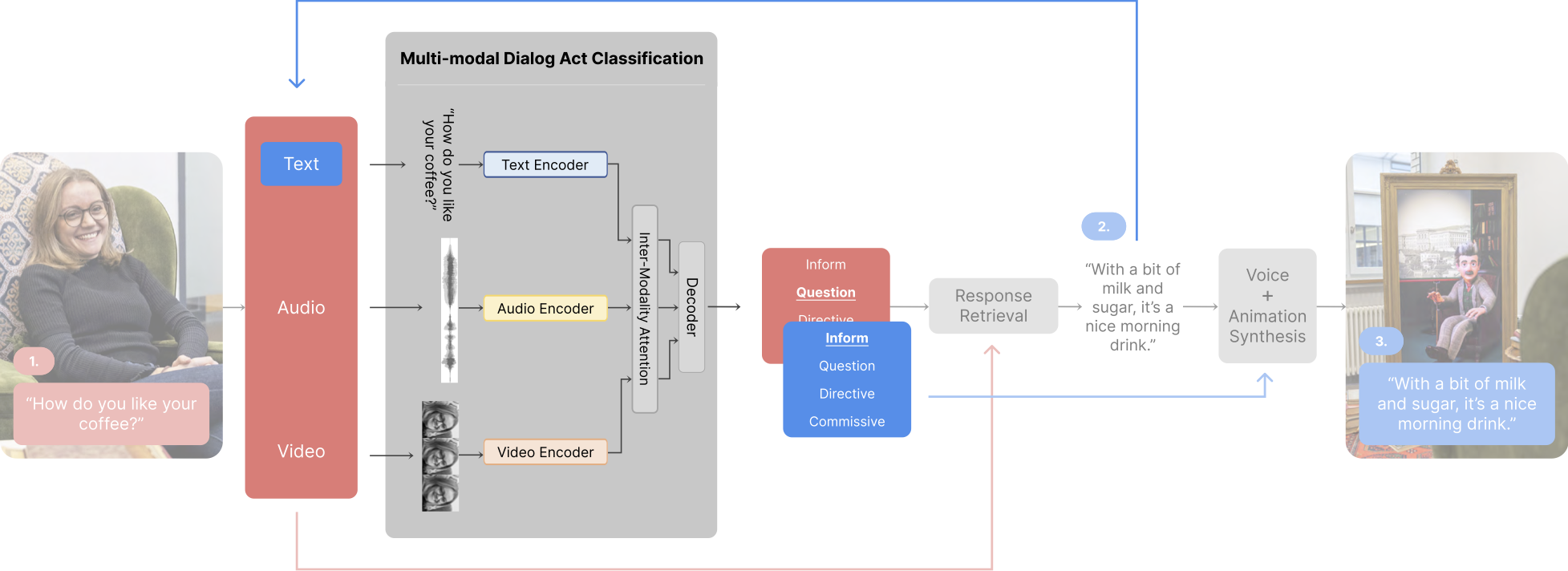

Dialog act classification is essential for enabling digital characters to understand and respond effectively to user intents, leading to more engaging and seamless interactions. Previous research has focused on classifying dialog acts from transcriptions alone because multimodal data was lacking. We close this gap by collecting a new multimodal (i.e., text, audio, video) dyadic dialog dataset from 60 participants. Based on our dataset, we developed a novel multimodal Transformer-based dialog act classification model. We show that our model can predict dialog acts in real time on four classes with a Macro F1 score of up to 80.81, outperforming the unimodal baseline by 1.24%. Our analysis shows that the segments of a sentence associated with the highest acoustic energy are the most predictive. By harnessing our new multimodal dataset, we pave the way for dynamic, real-time, and contextually rich conversations that enhance the experience of interacting with digital characters.